Clear GPU memory

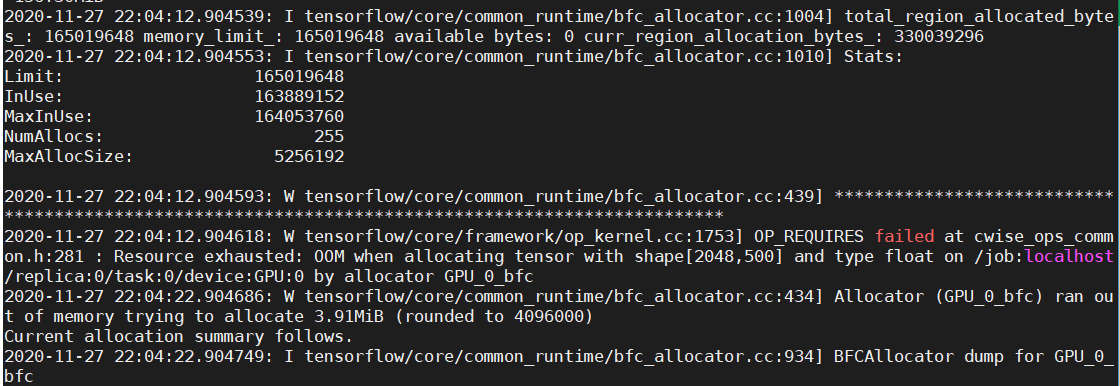

If you run TensorFlow programs in Jupyter Notebook on a shared GPU server, tensor data may still occupy a large amount of GPU memory after your network has finished training (you can check GPU usage with the `nvidia-smi` command). In that situation the GPUs are not computing anything, yet other students cannot use them even though they are idle.
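
To check usage from inside the notebook rather than a terminal, you can wrap `nvidia-smi` in a small helper. This is a sketch that assumes the NVIDIA driver utilities are on the `PATH`; `--query-gpu=...` and `--format=csv,noheader,nounits` are standard `nvidia-smi` options, while `parse_gpu_memory` and `query_gpu_memory` are hypothetical helper names, not part of any library.

```python
import subprocess

# Hypothetical helpers (not from any library) for reading per-GPU memory usage.

def parse_gpu_memory(csv_text):
    """Parse 'index, memory.used, memory.total' lines (values in MiB) into dicts."""
    gpus = []
    for line in csv_text.strip().splitlines():
        index, used, total = (field.strip() for field in line.split(","))
        gpus.append({"index": int(index), "used_mib": int(used), "total_mib": int(total)})
    return gpus

def query_gpu_memory():
    """Ask nvidia-smi for memory usage of every GPU (raises if nvidia-smi is missing)."""
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=index,memory.used,memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_gpu_memory(out)
```

Calling `query_gpu_memory()` returns one dict per GPU; a large `used_mib` on a GPU that is no longer training is the idle-but-occupied situation described above.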

Here is the easiest solution:

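```python
from numba import cuda

cuda.select_device(0)  # select GPU 0; change the index to the GPU your notebook used
cuda.close()           # tear down this process's CUDA context, releasing the memory it holds
# TensorFlow cannot use the GPU again in this kernel afterwards; restart the kernel to train again.
```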
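
To confirm that the memory was actually released, you can ask `nvidia-smi` which processes still hold GPU memory and look for the notebook kernel's own PID. A minimal sketch, assuming `nvidia-smi` is available and reports PIDs that match `os.getpid()` (this may not hold inside containers, where PID namespaces differ); `parse_compute_apps` and `gpu_memory_held_by` are hypothetical helper names.

```python
import os
import subprocess

# Hypothetical helpers (not from any library) for per-process GPU memory checks.

def parse_compute_apps(csv_text):
    """Parse 'pid, used_memory' lines (MiB) into {pid: total MiB across GPUs}."""
    usage = {}
    for line in csv_text.strip().splitlines():
        pid, used = (field.strip() for field in line.split(","))
        if not used.isdigit():  # some setups report "[N/A]"; skip those rows
            continue
        usage[int(pid)] = usage.get(int(pid), 0) + int(used)
    return usage

def gpu_memory_held_by(pid=None):
    """Return MiB of GPU memory nvidia-smi attributes to `pid` (default: this process)."""
    pid = os.getpid() if pid is None else pid
    out = subprocess.run(
        ["nvidia-smi", "--query-compute-apps=pid,used_memory",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_compute_apps(out).get(pid, 0)
```

Run `gpu_memory_held_by()` before and after `cuda.close()`; a result of `0` afterwards suggests the kernel no longer holds memory on any GPU.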